Building a "Zero-Cost" Multi-Agent Meal Planner

Recently, I've been diving deep into the world of AI agents and LLMs. I wanted to build something practical: a tool that could "read" my Ghost blog, understand my recipes, and generate a weekly meal plan without me having to lift a finger.

But I had a constraint: it had to be zero-cost. No expensive managed databases, no $20/month LLM subscriptions. Just pure, lean engineering.

For the interface, I chose Telegram. Why? Because it's one of the few platforms that still lets you create bots and access a powerful API completely for free. It's the perfect "frontend" for a project where the goal is $0 operational overhead.

Here is the story of how this project evolved from a messy single prompt into a structured multi-agent system.

TL;DR: The main lesson

If you're building with LLMs, split the brain. Don't ask one prompt to handle strategy, formatting, and math simultaneously. By creating a "Handover" pattern between a Strategic Analyst and an Executive Chef, I reduced hallucinations and built a system that actually understands how to cook for a family.

The "Aha!" Moment: Why one agent isn't enough

When I started, I had a single prompt. I'd feed it my recipes and say, "Give me a plan." It was a mess. The AI would forget that my kid hates spicy food, or it would suggest cooking a 2-hour lasagna for lunch on a busy Tuesday.

I realized that a single prompt was suffering from cognitive overload. It was trying to be a strategist and a sous-chef at the same time, and failing at both.

The Fix: A Multi-Agent Role-Based Architecture

I moved away from "vibe coding" and implemented a structured handover using Go.

- The Analyst (Strategy): This agent looks at the week and decides what we should cook and when. It owns the "batch cooking" logic: making sure we cook enough on Monday that we can reuse the leftovers on Tuesday.

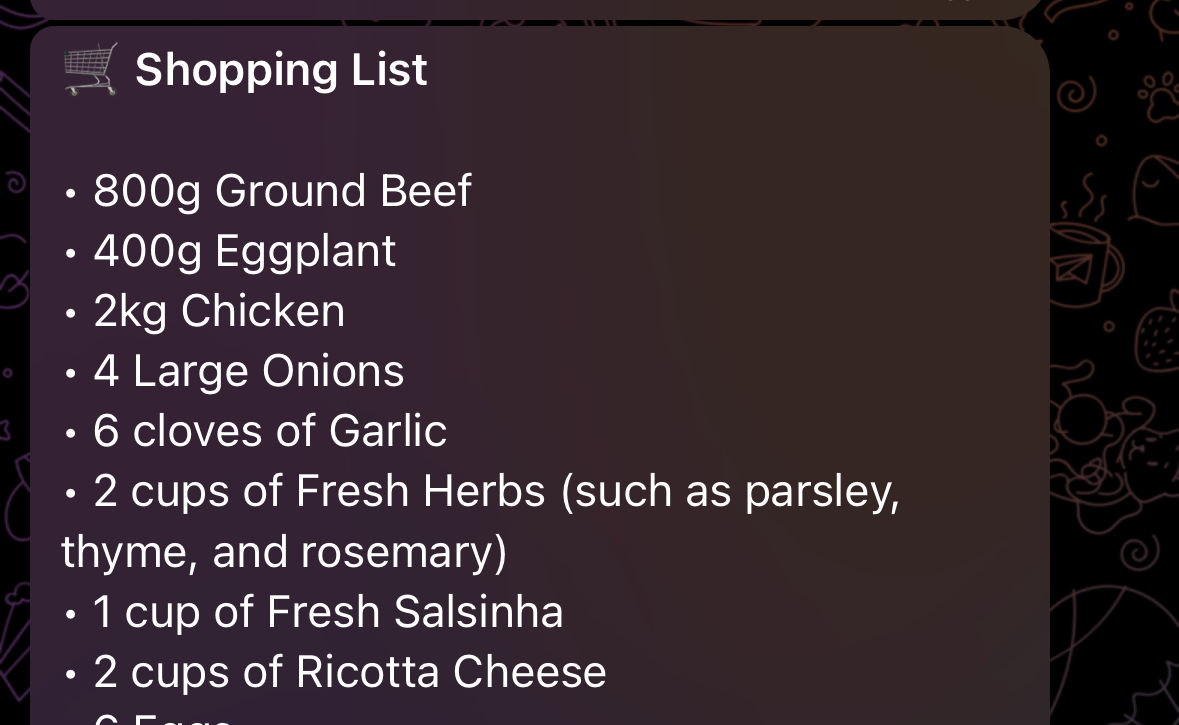

- The Chef (Execution): Once the Analyst creates a schedule, it "hands over" the plan to the Chef. The Chef doesn't care about strategy; its job is pure execution. It scales the ingredients for my household (2 adults, 1 kid) and consolidates the shopping list.
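The Chef's scaling and consolidation step is plain arithmetic, so it can be sketched in a few lines of Go. This is a minimal illustration under stated assumptions, not the project's actual code: the `Ingredient` and `Recipe` types, the `Servings` field, and the rule that a kid counts as half an adult portion are all hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// Ingredient and Recipe are hypothetical types for this sketch.
type Ingredient struct {
	Name     string
	Quantity float64
	Unit     string
}

type Recipe struct {
	Title       string
	Servings    float64 // portions the recipe is written for
	Ingredients []Ingredient
}

// portions converts a household into adult-sized portions.
// Assumption: a kid eats half an adult portion.
func portions(adults, kids int) float64 {
	return float64(adults) + 0.5*float64(kids)
}

// shoppingList scales every recipe to the household and merges
// ingredients that share both name and unit.
func shoppingList(recipes []Recipe, adults, kids int) []Ingredient {
	target := portions(adults, kids)
	totals := map[string]Ingredient{}
	for _, r := range recipes {
		if r.Servings <= 0 {
			continue // skip recipes without a usable serving count
		}
		factor := target / r.Servings
		for _, ing := range r.Ingredients {
			key := ing.Name + "|" + ing.Unit
			merged := totals[key]
			merged.Name, merged.Unit = ing.Name, ing.Unit
			merged.Quantity += ing.Quantity * factor
			totals[key] = merged
		}
	}
	list := make([]Ingredient, 0, len(totals))
	for _, ing := range totals {
		list = append(list, ing)
	}
	// Sort by name, then unit, so the output order is deterministic.
	sort.Slice(list, func(i, j int) bool {
		if list[i].Name != list[j].Name {
			return list[i].Name < list[j].Name
		}
		return list[i].Unit < list[j].Unit
	})
	return list
}

func main() {
	recipes := []Recipe{
		{"Bolognese", 4, []Ingredient{{"tomato", 800, "g"}, {"onion", 2, "pcs"}}},
		{"Tomato soup", 2, []Ingredient{{"tomato", 500, "g"}}},
	}
	for _, ing := range shoppingList(recipes, 2, 1) {
		fmt.Printf("%s: %.2f %s\n", ing.Name, ing.Quantity, ing.Unit)
	}
}
```

Doing this part in deterministic code rather than in the prompt is one possible design choice: the model picks and formats, while the multiplication stays out of its hands.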

Deep Dive: The Handover Pattern

To make this work reliably, I had to define a strict contract between the agents. In Go, this looks like a shared context that ensures the Chef has exactly what it needs to succeed without re-calculating the Analyst's logic.

The "Handover" Contract in Code:

    // This struct ensures the Chef doesn't have to guess.
    type MealPlanContext struct {
        SelectedRecipes []Recipe         `json:"selected_recipes"` // From Analyst
        Strategy        string           `json:"strategy"`         // The "Why"
        Household       config.Household `json:"scaling"`          // The "How much"
    }

By isolating these concerns, the "Chef" can focus 100% of its context window on formatting and math, which practically eliminated the "hallucinated ingredient" problem.
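To make the flow around that contract concrete, here is a rough sketch of how a handover could be wired. It is an assumption-heavy illustration, not the repository's implementation: the `LLM` interface, `runAnalyst`, `chefPrompt`, `stubLLM`, the prompt wording, and the simplified local `Recipe` and `Household` types (standing in for the real ones, including `config.Household`) are all hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// Recipe and Household are simplified stand-ins for the project's real types.
type Recipe struct {
	Title string `json:"title"`
}

type Household struct {
	Adults int `json:"adults"`
	Kids   int `json:"kids"`
}

// MealPlanContext mirrors the handover contract from the post.
type MealPlanContext struct {
	SelectedRecipes []Recipe  `json:"selected_recipes"`
	Strategy        string    `json:"strategy"`
	Household       Household `json:"scaling"`
}

// LLM is a hypothetical one-method abstraction over a provider.
type LLM interface {
	Complete(prompt string) (string, error)
}

// runAnalyst asks the model for a plan and decodes the reply into the
// contract. The household comes from local config, never from the model.
func runAnalyst(llm LLM, recipes []Recipe, h Household) (MealPlanContext, error) {
	titles := make([]string, len(recipes))
	for i, r := range recipes {
		titles[i] = r.Title
	}
	prompt := "Pick recipes for the week and explain the strategy. " +
		"Reply as JSON with keys selected_recipes and strategy.\n" +
		"Recipes: " + strings.Join(titles, ", ")
	raw, err := llm.Complete(prompt)
	if err != nil {
		return MealPlanContext{}, fmt.Errorf("analyst: %w", err)
	}
	var ctx MealPlanContext
	if err := json.Unmarshal([]byte(raw), &ctx); err != nil {
		return MealPlanContext{}, fmt.Errorf("analyst returned invalid JSON: %w", err)
	}
	if len(ctx.SelectedRecipes) == 0 {
		return MealPlanContext{}, fmt.Errorf("analyst selected no recipes")
	}
	ctx.Household = h
	return ctx, nil
}

// chefPrompt embeds the validated handover so the Chef only scales and formats.
func chefPrompt(ctx MealPlanContext) (string, error) {
	payload, err := json.MarshalIndent(ctx, "", "  ")
	if err != nil {
		return "", err
	}
	return "Scale these recipes and build one shopping list. " +
		"Do not change the selection.\n" + string(payload), nil
}

// stubLLM returns a canned reply so the sketch runs without network access.
type stubLLM struct{ reply string }

func (s stubLLM) Complete(string) (string, error) { return s.reply, nil }

func main() {
	llm := stubLLM{reply: `{"selected_recipes":[{"title":"Lasagna"}],"strategy":"Batch cook on Monday"}`}
	ctx, err := runAnalyst(llm, []Recipe{{Title: "Lasagna"}, {Title: "Curry"}}, Household{Adults: 2, Kids: 1})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	prompt, err := chefPrompt(ctx)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(prompt)
}
```

The point of the sketch is the validation step in the middle: a malformed or empty Analyst reply fails loudly before the Chef ever sees it.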

Engineering for Zero-Cost

To keep this project truly free, I had to get creative with the stack:

- The Brains: I use a dual-provider approach with Google Gemini (for embeddings) and Groq (for fast inference). Both have generous free tiers that easily handle a daily planning session.

- The Interface: By using Telegram, I got a professional UI (chat, buttons, notifications) without writing a single line of CSS or paying for hosting a complex frontend.

- The Memory: Instead of a paid vector database, I use a Hybrid Storage approach. Recipes are stored as flat JSON files (perfect for RAG), and a pure-Go SQLite implementation holds the observability metrics.

- The Hardware: The entire bot runs on less than 15 MB of RAM. It's lean enough to run on a home server or the smallest cloud instance you can find.
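For a recipe collection of blog size, retrieval over flat files can be a brute-force similarity scan, with no vector database involved. The sketch below is one way that could look, not a description of the actual repository: the `StoredRecipe` shape, the `recipes` directory name, and the idea of storing a precomputed embedding next to each recipe are assumptions, and the toy 2-D vectors stand in for real embeddings from the provider.

```go
package main

import (
	"encoding/json"
	"fmt"
	"math"
	"os"
	"path/filepath"
	"sort"
)

// StoredRecipe is a hypothetical on-disk shape: one JSON file per recipe,
// with the embedding computed once at import time.
type StoredRecipe struct {
	Title     string    `json:"title"`
	Embedding []float64 `json:"embedding"`
}

// loadRecipes reads every *.json file in dir into memory.
func loadRecipes(dir string) ([]StoredRecipe, error) {
	paths, err := filepath.Glob(filepath.Join(dir, "*.json"))
	if err != nil {
		return nil, err
	}
	recipes := make([]StoredRecipe, 0, len(paths))
	for _, p := range paths {
		data, err := os.ReadFile(p)
		if err != nil {
			return nil, err
		}
		var r StoredRecipe
		if err := json.Unmarshal(data, &r); err != nil {
			return nil, fmt.Errorf("%s: %w", p, err)
		}
		recipes = append(recipes, r)
	}
	return recipes, nil
}

// cosine returns the cosine similarity of a and b, or 0 if the vectors
// differ in length or either has zero magnitude.
func cosine(a, b []float64) float64 {
	if len(a) != len(b) {
		return 0
	}
	var dot, na, nb float64
	for i := range a {
		dot += a[i] * b[i]
		na += a[i] * a[i]
		nb += b[i] * b[i]
	}
	if na == 0 || nb == 0 {
		return 0
	}
	return dot / (math.Sqrt(na) * math.Sqrt(nb))
}

// topK returns up to k recipes, most similar to the query first.
func topK(query []float64, recipes []StoredRecipe, k int) []StoredRecipe {
	ranked := append([]StoredRecipe(nil), recipes...) // copy; leave input untouched
	sort.SliceStable(ranked, func(i, j int) bool {
		return cosine(query, ranked[i].Embedding) > cosine(query, ranked[j].Embedding)
	})
	if k < 0 {
		k = 0
	}
	if k > len(ranked) {
		k = len(ranked)
	}
	return ranked[:k]
}

func main() {
	recipes, err := loadRecipes("recipes") // hypothetical directory name
	if err != nil || len(recipes) == 0 {
		// Fall back to toy 2-D vectors so the sketch runs anywhere.
		recipes = []StoredRecipe{
			{"Chili", []float64{1, 0}},
			{"Pancakes", []float64{0, 1}},
			{"Stew", []float64{0.9, 0.1}},
		}
	}
	query := []float64{1, 0} // in practice this would come from the embedding provider
	for _, r := range topK(query, recipes, 2) {
		fmt.Println(r.Title)
	}
}
```

A linear scan like this costs one dot product per recipe, which is negligible for a few hundred recipes and keeps the memory footprint small.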

Observability on a budget

One issue I ran into was "context bloat": prompts growing so large that they hit the providers' rate limits. Since I didn't want to pay for monitoring tools, I built my own metrics store in SQLite.

Now, I can just type /metrics in Telegram and see exactly how many tokens I've used and the latency of each agent. It's professional-grade monitoring for $0, delivered right to my phone.
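The aggregation behind a reply like that is small. Here is a hedged sketch of the idea that keeps calls in memory so it runs with the standard library alone; the real bot persists them in SQLite, and the `CallMetric` fields, the `MetricsStore` type, and the output format are all made up for illustration.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
	"time"
)

// CallMetric describes one LLM call. Field names are hypothetical.
type CallMetric struct {
	Agent   string
	Tokens  int
	Latency time.Duration
}

// MetricsStore keeps calls in memory for this sketch; the post's version
// writes them to SQLite instead.
type MetricsStore struct {
	mu    sync.Mutex
	calls []CallMetric
}

// Record appends one call; safe to use from concurrent handlers.
func (s *MetricsStore) Record(m CallMetric) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.calls = append(s.calls, m)
}

// Summary renders per-agent call counts, token totals, and average
// latency, one agent per line, sorted by agent name.
func (s *MetricsStore) Summary() string {
	s.mu.Lock()
	defer s.mu.Unlock()

	type agg struct {
		calls, tokens int
		latency       time.Duration
	}
	byAgent := map[string]*agg{}
	for _, c := range s.calls {
		a := byAgent[c.Agent]
		if a == nil {
			a = &agg{}
			byAgent[c.Agent] = a
		}
		a.calls++
		a.tokens += c.Tokens
		a.latency += c.Latency
	}

	names := make([]string, 0, len(byAgent))
	for name := range byAgent {
		names = append(names, name)
	}
	sort.Strings(names)

	var b strings.Builder
	for _, name := range names {
		a := byAgent[name]
		avg := a.latency / time.Duration(a.calls) // calls >= 1 for every entry
		fmt.Fprintf(&b, "%s: %d calls, %d tokens, avg %s\n", name, a.calls, a.tokens, avg)
	}
	return b.String()
}

func main() {
	store := &MetricsStore{}
	store.Record(CallMetric{"analyst", 1200, 800 * time.Millisecond})
	store.Record(CallMetric{"analyst", 800, 600 * time.Millisecond})
	store.Record(CallMetric{"chef", 500, time.Second})
	fmt.Print(store.Summary()) // text a /metrics handler could send back
}
```

Tracking tokens per agent, rather than per session, is what makes context bloat visible: a prompt that keeps growing shows up as a rising token total for one specific agent.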

Final Thoughts

This journey taught me that "Agentic" workflows aren't just hype; they are a pragmatic answer to LLM unreliability. By treating my AI like a small, specialized workforce rather than a single magic box, I built something that actually works for my family's daily life.

Architecture beats prompts every time.

Show me the code?

You can check out the full architecture and the Handover implementation on my GitHub.